Bob Sutton (author of the excellent Hard Facts on evidence-based management, and other books) has had a few great posts recently on intuition, self-knowledge and cognitive bias (among other things):

- In Flawed Self-Evaluations he talks about people’s tendency to overestimate their own knowledge or skill, and about how the less they know about an area, the more they overestimate their abilities.

- In Intuition vs. Data-Driven Decision-Making he addresses the question of when you should follow intuition and when you should base your decisions on concrete evidence, and the kinds of cognitive biases (confirmation bias and the fallacy of centrality) that can cause intuition to be wrong.

While not directly tied to instructional design, cognitive bias is inevitably going to come into play whenever a learning experience requires a change of attitude or behavior, or the acquisition of very unfamiliar information or ideas.

Also, the List of Cognitive Biases is one of the most entertaining Wikipedia pages going:

http://en.wikipedia.org/wiki/List_of_cognitive_biases

While Sutton’s discussion of these issues is insightful and balanced, in other venues the discussion of cognitive biases seems to have the persistent theme “Why are people so dumb?”

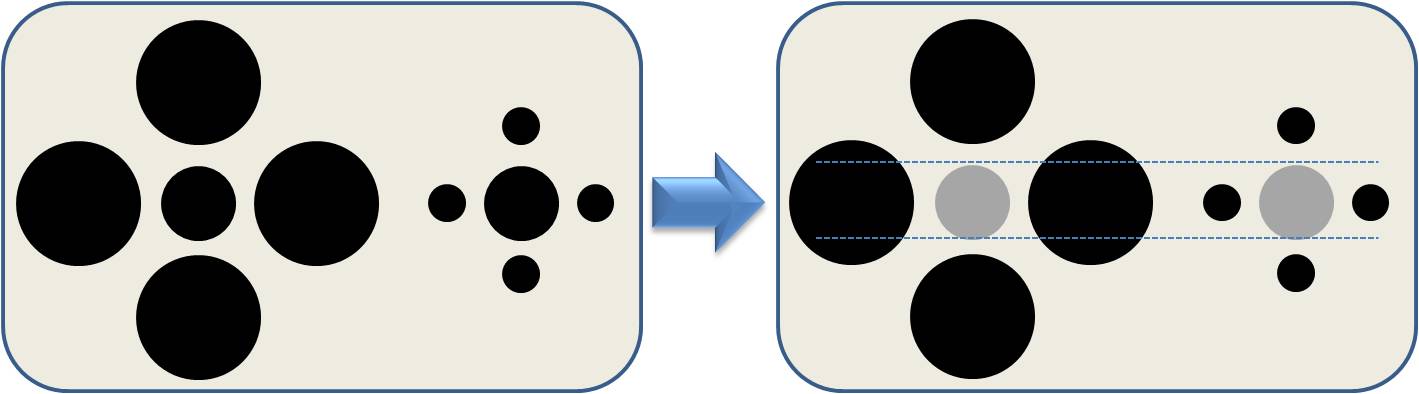

Lately I’ve been considering the notion that most cognitive biases have roots in functional behaviors. Optical illusions are interesting not just because they are wacky or mind-bending, but because they reveal how the brain adapts to and interprets the visual world. In the same way, cognitive distortions are interesting because they make explicit and visible the cognitive shorthand we use to interpret the world all the time.

I think it’s primarily a matter of the brain’s efficiency: if we couldn’t automate some (or even the majority) of our decision-making, we’d never get anything done efficiently. This is similar to the phenomenon Antonio Damasio described around the difficulty of decision-making in the absence of emotion.

The disconnect shows up when those automated patterns of thinking become calcified or lazy, or when the thought pattern is so ingrained that it’s completely transparent to the individual.

Consider the idea from evolutionary psychology that an attraction to foods with high caloric density (sugar or fats) once conferred an evolutionary advantage but now works against us in our food-abundant societies. Similarly, many cognitive biases seem to have functional roots when applied in the right context. It might also be useful to identify those contexts, so that we can better understand where the same behaviors are misapplied.

With a number of cognitive biases, it’s fairly easy to hypothesize a context where that behavior could be valuable. Take the fallacy of centrality: in most cases, people are probably *right* to think that if something were going on, they’d know about it. Without this mental shorthand, they would be hopelessly mired in detail or wild goose chases.

I’m particularly intrigued by the cognitive distortion described in the Flawed Self-Evaluations post. Inflated self-views are widespread: the majority of people believe they are of above-average intelligence, or better-than-average drivers, and so on, which are all statistical impossibilities. That prevalence makes me wonder whether there isn’t some functional basis for those beliefs (although it could be as simple as a need to protect one’s self-esteem, or statistical illiteracy).

The question remains: what do we do about it? Evidence-based management is definitely one key tool for checking intuition or habit. Sutton’s description of people who “act on their beliefs, while doubting what they know” is very useful. But because these behaviors are so automated, it is particularly difficult for people to recognize and question them. It might help to have predefined criteria that trigger specific analytical activities as a guard against them. Some people (as Sutton describes) seem to do this naturally, but the rest of us may need to define implementation intentions for our own behavior (If I find myself doing X, I will sit down and do Y).

In instructional settings, it’s useful to consider what biases might exist already in your audience, and to keep an eye out for evidence that these biases are occurring. It can also be useful to make learners aware of their own biases, and teach them skills that allow them to look out for them (I found this nifty confirmation bias game when I was looking for resources for this post).

But I think one of the most useful things to keep in mind is that there are real reasons why these biases exist. When you encounter them in your learners, it is not a sign of obtuseness or stubbornness.

After all, which of us hasn’t had the experience of being absolutely certain we were right, having no reservations about expressing our *rightness*, and then finding out later that we were…um…yeah…completely wrong?

Added note: Related material about Dysrationalia turned up just today here and here.

Another added note: There’s a great Radiolab episode about the benefits of self-deception here: Radiolab-Lying to Ourselves, along with the research they cite: Self-deception and Its Relationship to Success in Competition

You’re right to highlight this area – it’s an interesting one.

I think any discussion of this area for IDs/trainers has to chuck in empathy, sympathy (and its evil twin, antipathy) and logical fallacies too. Along with ignorance, these are all components of being dumb and of spotting dumbness. The differences between them are subtle and far-reaching.

I’d also hazard a guess that, if cognitive biases are functional in origin, we instructors don’t so much have to forewarn learners about their existence as guard them against ours.

If learners are ‘functioning’ in contexts we’ve created, then they’ll be responding to stimuli we’ve set up.

Couple this with the fact that we’re the ones persuading and simplifying (often while ‘working for the man’). Both activities are more likely to produce cognitive bias than their counterparts, so I’d say the onus is definitely on us to exercise caution.

Here are some links to more stuff you might find interesting:

Stuart Sutherland wrote a great (and now fairly cheap) book called Irrationality. Mine’s dog-eared and bejotted.

Thinking Straight from University of Tennessee

Fallacies and pedagogy (and loads more) from the Internet Encyclopedia of Philosophy

Taxonomy of Logical Fallacies. I love a good taxonomy.

Very nice information, I am impressed.

Keep up the good work.